【Abstract】Integrating superpixel segmentation into convolutional neural networks is known to be effective in enhancing the accuracy of land-cover classification. However, most existing methods accomplish such integration by developing new network architectures, which suffer from several flaws: conflicts between general superpixels and semantic labels introduce noise into training, especially at object boundaries; the absence of training guidance for superpixels leads to ineffective regional feature learning; and unnecessary superpixel segmentation in the testing stage not only increases the computational burden but also produces jagged edges. In this study, we propose a novel semantic-aware region (SARI) loss that guides the effective learning of regional features with superpixels for accurate land-cover classification. The key idea is to reduce the feature variance inside and between homogeneous superpixels while enlarging the feature discrepancy between heterogeneous ones. The SARI loss is accordingly designed with three subparts: a superpixel variance loss, an intraclass similarity loss, and an interclass distance loss. We also develop semantic superpixels that assist network training with the SARI loss while overcoming the limitations of general superpixels. Extensive experiments on two challenging datasets demonstrate that the SARI loss facilitates regional feature learning, achieving state-of-the-art performance with mIoU scores of around 97.11% and 73.99% on the Gaofen Image dataset and the DeepGlobe dataset, respectively.
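The abstract names the three subparts of the SARI loss but does not give their formulas. The NumPy sketch below shows one plausible reading under stated assumptions and is not the authors' implementation: semantic superpixels are assumed to be general superpixels split along ground-truth class boundaries; the variance term is assumed to be the mean squared distance of pixel features to their region mean; the intraclass term pulls region means toward a per-class center; and the interclass term is a squared hinge that pushes class centers at least `margin` apart. The function names `semantic_superpixels` and `sari_loss`, the `margin` and `weights` parameters, and the squared-Euclidean distances are all hypothetical. A trainable version would live in an autodiff framework; NumPy is used here only to make the arithmetic explicit.

```python
import numpy as np


def semantic_superpixels(superpixels: np.ndarray, labels: np.ndarray) -> np.ndarray:
    """Split every general superpixel along ground-truth class boundaries.

    Hypothetical construction: the new region id is the pair
    (superpixel id, class label), renumbered to consecutive integers,
    so each resulting region contains a single class.
    """
    n_classes = int(labels.max()) + 1
    pairs = superpixels.astype(np.int64) * n_classes + labels.astype(np.int64)
    _, ids = np.unique(pairs.ravel(), return_inverse=True)
    return ids.reshape(superpixels.shape)


def sari_loss(features, regions, labels, margin=1.0, weights=(1.0, 1.0, 1.0)):
    """Hypothetical three-part SARI-style loss on one image.

    features: (H, W, C) float array of per-pixel features.
    regions:  (H, W) int array of label-pure (semantic) superpixel ids.
    labels:   (H, W) int array of ground-truth classes.
    """
    c = features.shape[-1]
    f = features.reshape(-1, c).astype(np.float64)
    _, ids = np.unique(regions.ravel(), return_inverse=True)  # consecutive region ids
    lab = labels.ravel()
    n_regions = int(ids.max()) + 1

    # Mean feature of every region (every id has at least one pixel).
    sums = np.zeros((n_regions, c))
    np.add.at(sums, ids, f)
    counts = np.bincount(ids, minlength=n_regions)
    means = sums / counts[:, None]

    # 1) Superpixel variance loss: spread of pixel features around their region mean.
    l_var = np.mean(np.sum((f - means[ids]) ** 2, axis=1))

    # Class of each region (assumes regions are label-pure).
    region_class = np.empty(n_regions, dtype=np.int64)
    region_class[ids] = lab
    classes, class_idx = np.unique(region_class, return_inverse=True)
    centers = np.stack([means[class_idx == k].mean(axis=0) for k in range(len(classes))])

    # 2) Intraclass similarity loss: pull region means of one class toward a shared center.
    l_intra = np.mean(np.sum((means - centers[class_idx]) ** 2, axis=1))

    # 3) Interclass distance loss: hinge penalty when two class centers are closer than `margin`.
    l_inter = 0.0
    if len(classes) > 1:
        dist = np.linalg.norm(centers[:, None, :] - centers[None, :, :], axis=-1)
        upper = np.triu_indices(len(classes), k=1)
        l_inter = float(np.mean(np.maximum(0.0, margin - dist[upper]) ** 2))

    w_var, w_intra, w_inter = weights
    return float(w_var * l_var + w_intra * l_intra + w_inter * l_inter)
```

For example, on a 2×4 image with two classes, features that are constant within each class and 5 units apart give a loss of 0, while an all-zero (collapsed) feature map is penalized only by the interclass term, giving 1.0 with the default `margin=1.0`.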

【Article Information】Zheng, X.; Ma, Q.; Huan, L.; Xie, X.; Xiong, H.; Gong, J. Semantic-Aware Region Loss for Land-Cover Classification. IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, vol. 16, 2023. License: CC BY 4.0, https://creativecommons.org/licenses/by/4.0

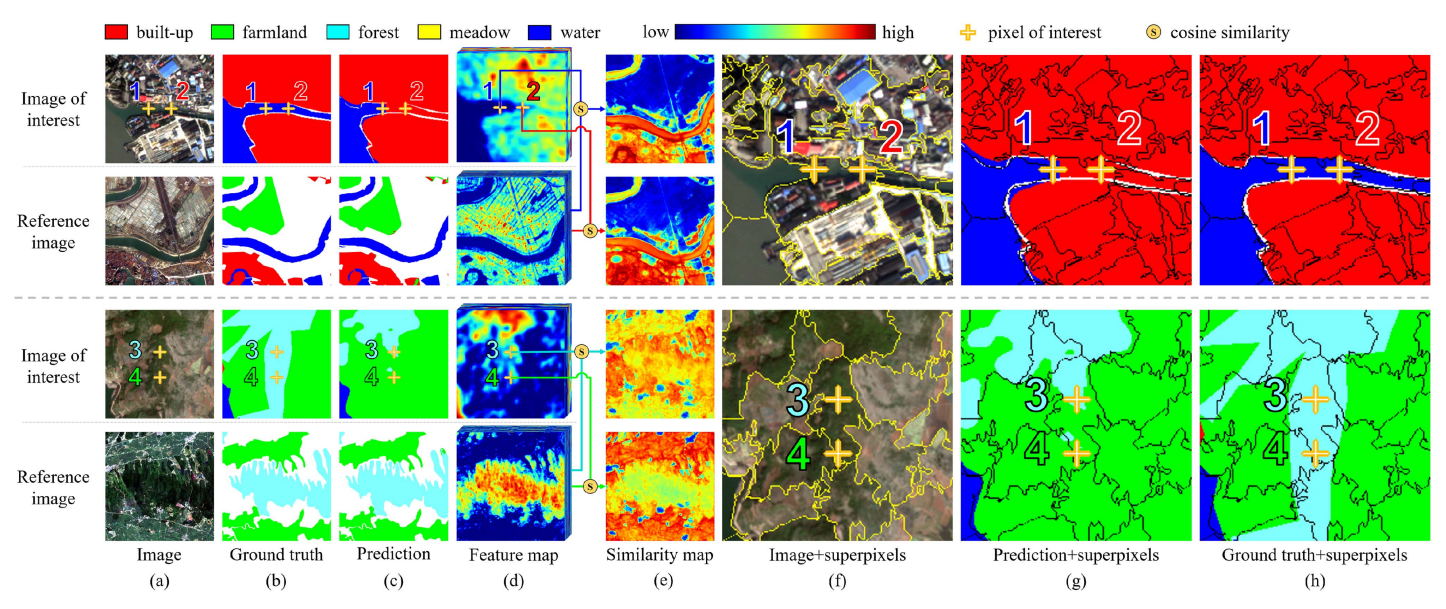

Feature comparison between congeneric pixels that are visually consistent but receive different predictions. In the similarity maps in column (e), the warmer the color, the more similar the features. Column (e) reveals the feature difference between the pixels of interest, which helps explain why misclassification happens. Columns (f)–(h) indicate that superpixels can group visually similar pixels into regions and thus have the potential to improve land-cover classification results.
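The caption does not state how the similarity maps in column (e) are computed. A common choice, assumed here, is the cosine similarity between the feature vector of a pixel of interest and those of all other pixels. The sketch also includes a hypothetical `superpixel_pool` helper that replaces each pixel's feature with its superpixel mean, illustrating why grouping pixels into superpixels, as in columns (f)–(h), can make the features of congeneric pixels in one region identical. Both function names are illustrative, not taken from the paper.

```python
import numpy as np


def similarity_map(features: np.ndarray, row: int, col: int) -> np.ndarray:
    """Cosine similarity between one pixel's feature and every pixel's feature.

    features: (H, W, C) per-pixel feature map (assumed taken from some network layer).
    Returns an (H, W) map in [-1, 1]; higher values mean more similar features.
    """
    flat = features.reshape(-1, features.shape[-1]).astype(np.float64)
    norms = np.linalg.norm(flat, axis=1, keepdims=True)
    unit = flat / np.maximum(norms, 1e-12)  # guard against zero-length vectors
    query = unit[row * features.shape[1] + col]  # row-major index of the pixel of interest
    return (unit @ query).reshape(features.shape[:2])


def superpixel_pool(features: np.ndarray, superpixels: np.ndarray) -> np.ndarray:
    """Hypothetical helper: replace each pixel's feature with its superpixel's mean feature."""
    c = features.shape[-1]
    flat = features.reshape(-1, c).astype(np.float64)
    _, ids = np.unique(superpixels.ravel(), return_inverse=True)  # consecutive ids
    sums = np.zeros((int(ids.max()) + 1, c))
    np.add.at(sums, ids, flat)
    means = sums / np.bincount(ids)[:, None]
    return means[ids].reshape(features.shape)
```

With a 2×2 toy feature map whose two top pixels share a superpixel but have orthogonal features, the raw similarity between them is 0; after `superpixel_pool` it becomes 1, mirroring the intuition in columns (f)–(h).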